Learned Multi-View Texture Super-Resolution

Capturing virtual 3D object models is one of the fundamental tasks of computer vision. Movies, computer games, and all sorts of future virtual and augmented reality applications require methods to create visually realistic 3D content. Besides reconstructing the best possible 3D geometry, an equally important, but perhaps less appreciated step of that modeling process is to generate high-fidelity sur-face texture. However, the vast majority of image-based 3D reconstruction methods ignores the texture component and merely stitches or blends pieces of the input images to a texture map in a post-processing step, at the resolution of the inputs.

In this project, we explore the possibility to compute a higher resolution texture map, given a set of images with known camera poses that observe the same 3D object, and a 3D surface mesh of the object (which may or may not have been created from those images). There are two fundamentally different approaches to image super-resolution (SR):

1. Redundancy-based multi-image SR: it uses the fact that each camera view represents a different spatial sampling of the same object surface. The resulting oversampling can be used to reconstruct the underlying surface reflectance at higher resolution in a physically consistent manner, by inverting the image formation process that mapped the surface to the different views.

2. Prior-based single-image SR: it aims to generate a plausible, visually convincing high-resolution (HR) image from a single low-resolution (LR) image, by learning from examples what HR patterns are likely to have produced the low-resolution image content. Also for this obviously ill-posed problem, impressive visual quality has been achieved, particularly with recent deep learning approaches. Clearly, single-image SR can only ever “hallucinate from memory”, since it is entirely based on prior knowledge. There is no redundancy to constrain the reconstructed high-frequency content to be physically correct.

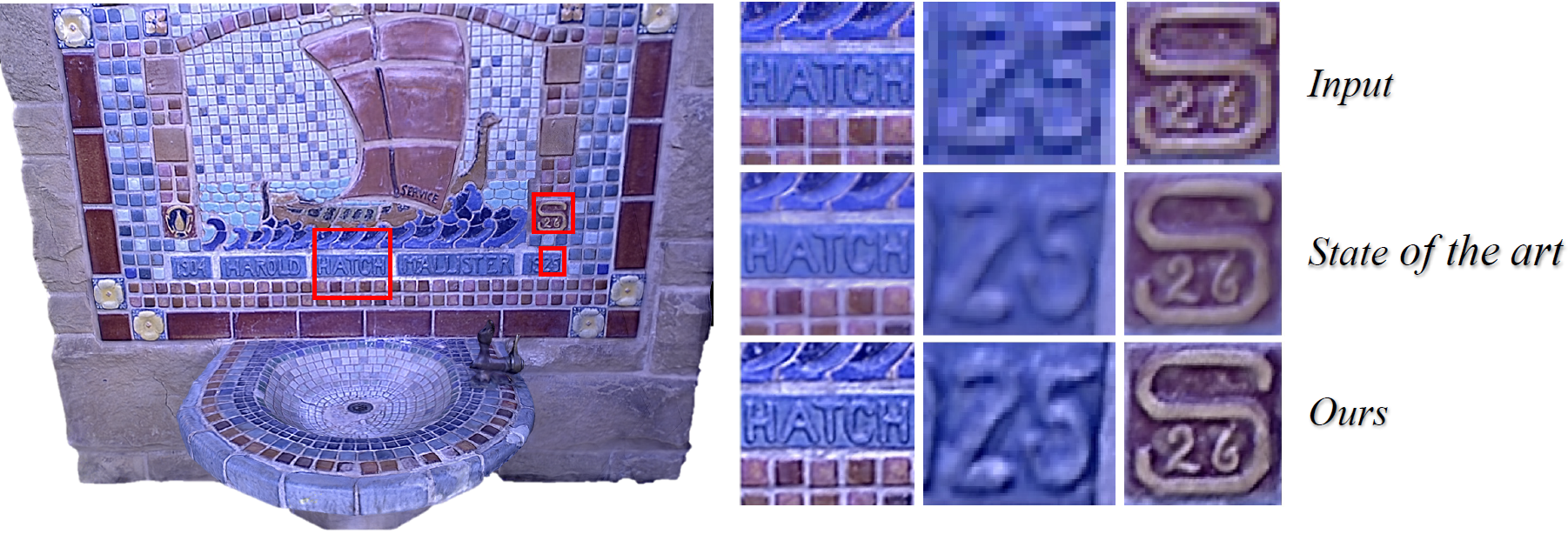

Each of these two approaches has been shown – separately– to work rather well on natural images. But the vast majority of existing work is limited to either one or the other. Our goal is to unify them both into an integrated learning-based computational model that combines their respective advantages. We propose to map the inverse problem (i.e. first SR principle) into a block of suitably designed neural network layers, and to combine it with a standard encoder-decoder network for learned single-image super-resolution (i.e. second SR principle).

We use two datasets of different types for the training of our network: a single-image dataset and a multi-view 3D dataset. Experiments demonstrate that the combination of multi-view observations and learned prior yields improved texture maps [1].

Publication:

[1] Richard, A., Cherabier, I., Oswald, M.R., Tsiminaki, V., Pollefeys, M., Schindler, K.: Learned Multi-View Texture Super-Resolution (PDF, 16.4 MB); Supplementary (PDF, 21.2 MB), 3DV Conference, Québec, 2019

Reference:

[2] Tsiminaki, V., Franco, J., Boyer, E.: High resolution 3D shape texture from multiple videos, CVPR Conference, Ohio, 2014

Contacts:

Audrey Richard,

Ian Cherabier,

Martin R. Oswald,

Prof. Konrad Schindler,