Automatic Drone Tracking and 3D Trajectory Reconstruction

The number of drones in the air is rising sharply as they enter our everyday lives. They are used for surveying, package delivery, mapping, and filming, as well as by hobbyists. Despite their popularity, little has been done so far to ensure the safety and effectiveness of drone operations. Several dangerous incidents have already occurred, such as the presence of a drone near Gatwick Airport, which affected around a thousand flights and more than a hundred thousand passengers and caused tremendous economic damage. To ensure the safety and effectiveness of low-altitude air traffic, drone operations must be regulated, and we must have systems to enforce those regulations. Although the exact form of those regulations and systems is still unclear, it is certain that the ability to monitor low-altitude air traffic will play a crucial role.

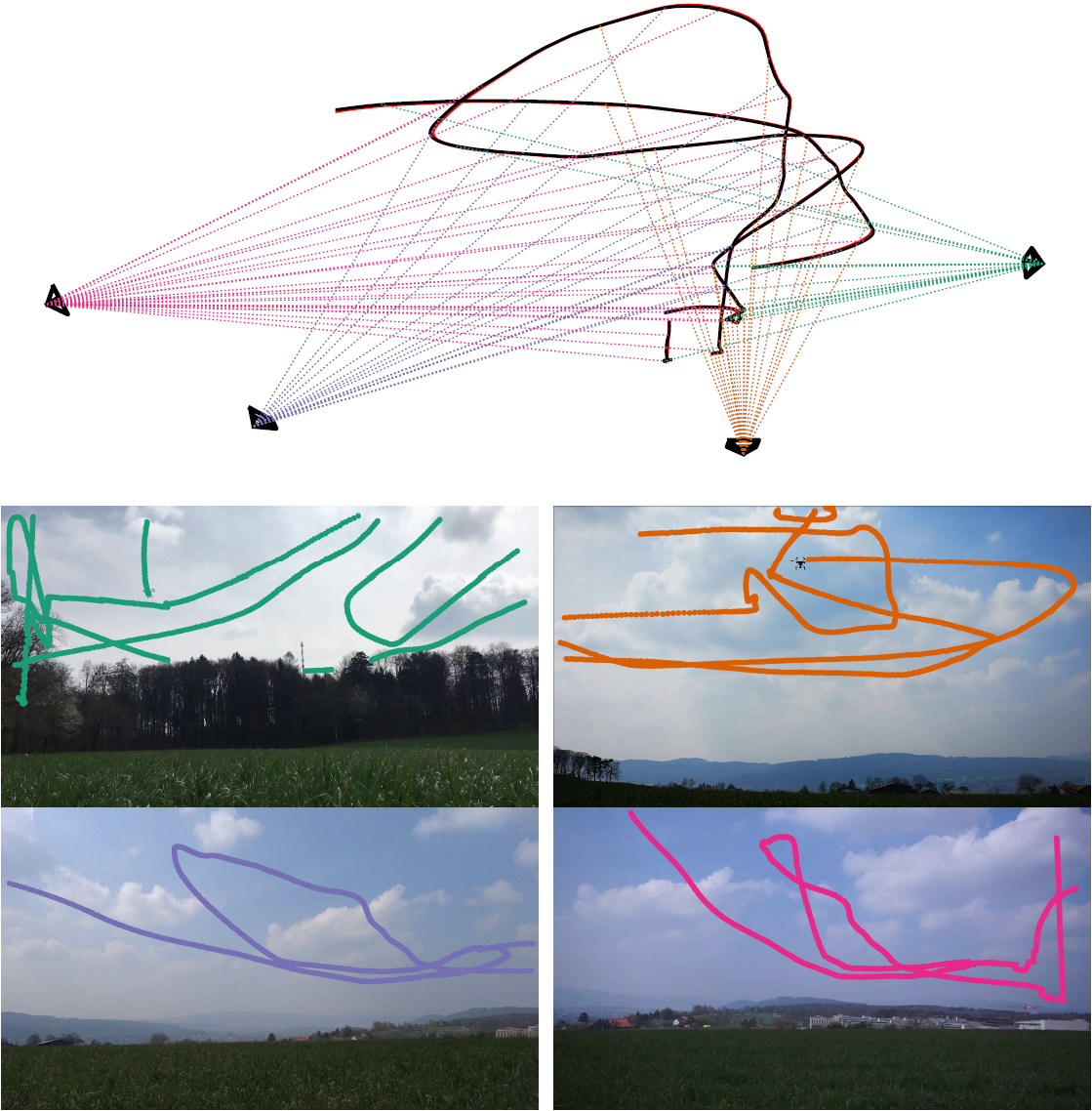

The aim of this project is to push the boundaries of the state of the art in computer vision and machine learning in order to enable systems that monitor drone traffic, make it more effective, and help prevent accidents. We develop computer vision systems that detect, track, and reconstruct the 3D trajectories of drones automatically, in real time, and with affordable hardware. Multi-camera networks are better suited for this task than other methods such as radar or sonar. They are orders of magnitude cheaper, can detect very small objects, can visually distinguish the object type, are modular and easily extendable, and can cover areas with occlusions such as buildings. Last but not least, they are low-power and do not emit any radiation. The main challenges are automatic object detection, calibration, and synchronization.

Our initial results (Li et al., 2020) show that it is possible to automatically calibrate a large-scale outdoor camera network consisting of off-the-shelf cameras using only the detections of flying objects as data. We solve the synchronization problem by estimating the camera geometry simultaneously with the time shift and time scale parameters of each camera. Once the initial camera geometry is computed, we reconstruct the 3D drone trajectory by modeling it with a spline parametrization combined with physics-based motion priors. Such a system achieved a 3D tracking accuracy of several centimeters over an area of approximately 100 by 100 meters.
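To make the role of the time shift and scale parameters more concrete, the following is a minimal sketch, not the method from the publication. It assumes that each camera maps its frame index k to a shared timeline as t = alpha * k + beta, that the trajectory is a uniform cubic B-spline with knots at integer times, and that the camera is described by a 3x4 projection matrix. All names (`frame_to_global_time`, `eval_cubic_bspline`, `reprojection_residuals`, `alpha`, `beta`) are hypothetical and chosen for illustration only.

```python
import numpy as np


def frame_to_global_time(frame_idx, alpha, beta):
    """Map a camera frame index to the shared timeline: t = alpha * k + beta.

    alpha (time scale) and beta (time shift) are assumed per-camera unknowns.
    """
    return alpha * np.asarray(frame_idx, dtype=float) + beta


def eval_cubic_bspline(ctrl, t):
    """Evaluate a uniform cubic B-spline at time t.

    ctrl is an (N, 3) array of control points with knots assumed at integer
    times, so the curve is defined for 0 <= t <= N - 3.
    """
    ctrl = np.asarray(ctrl, dtype=float)
    i = int(np.clip(np.floor(t), 0, len(ctrl) - 4))  # index of the active segment
    u = t - i                                        # local parameter in [0, 1]
    basis = np.array([
        (1 - u) ** 3,
        3 * u ** 3 - 6 * u ** 2 + 4,
        -3 * u ** 3 + 3 * u ** 2 + 3 * u + 1,
        u ** 3,
    ]) / 6.0
    return basis @ ctrl[i:i + 4]


def project(P, X):
    """Project a 3D point X with a 3x4 camera matrix P to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def reprojection_residuals(P, ctrl, frame_idx, detections, alpha, beta):
    """Stack 2D residuals between projected spline points and detections.

    Each detection is compared with the spline evaluated at the global time
    of its frame, so the residuals depend on alpha and beta as well as on
    the geometry.
    """
    res = []
    for k, d in zip(frame_idx, detections):
        t = frame_to_global_time(k, alpha, beta)
        res.append(project(P, eval_cubic_bspline(ctrl, t)) - np.asarray(d))
    return np.concatenate(res)
```

In a full system, residuals of this kind would presumably be minimized jointly over the camera parameters, the spline control points, and each camera's `alpha` and `beta` with a nonlinear least-squares solver, together with a penalty term encoding the motion prior. The sketch only shows how a wrong time shift or scale turns into reprojection error.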

The open problems and our current focus are 1) extending our current method to tracking multiple drones and 2) automatic 2D detection and tracking of drones in image sequences. So far we have focused on the 3D part of the problem, and the 2D detections were obtained semi-manually. Detecting and tracking drones is quite different from classic recognition tasks such as detecting people and cars. The challenges include the small size of the drone in the image and its very fast motion. Another challenge is night-time drone tracking in infrared imagery, which usually has very low resolution. We are working on adapting current state-of-the-art detectors and trackers to the task of drone tracking.

Publication:

Li, J., Murray, J., Ismaili, D., Schindler, K., Albl, C.: Reconstruction of 3D flight trajectories from ad-hoc camera networks, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020

Contacts:

Cenek Albl

Konrad Schindler