Semantic 3D Reconstruction of Urban Scenes

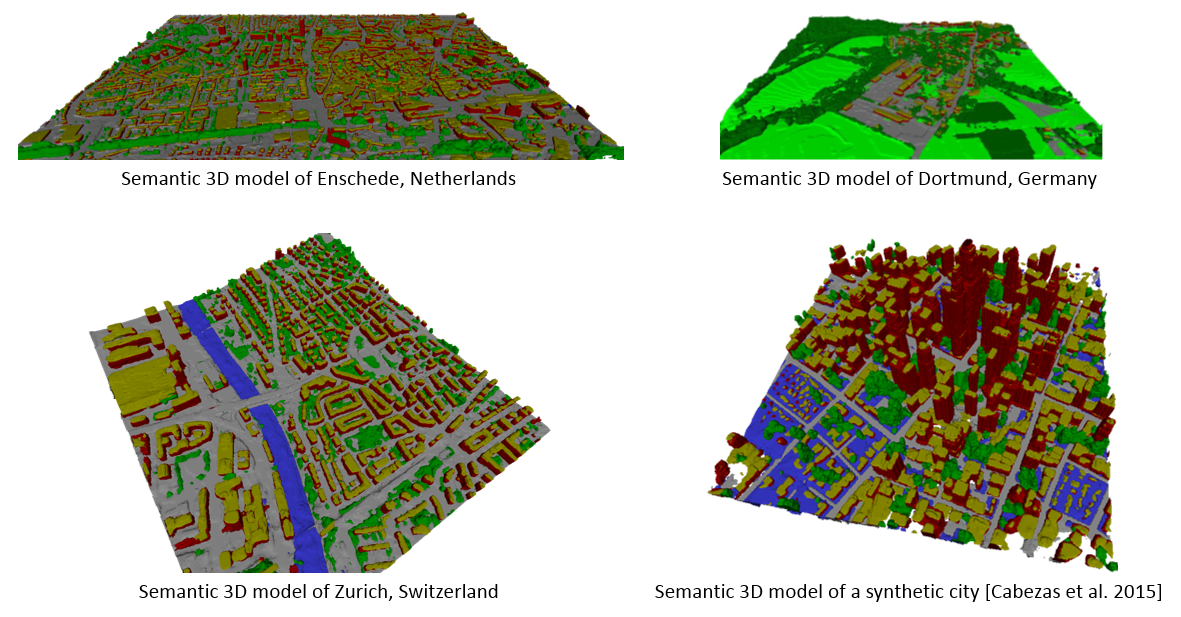

The goal of this project is the automatic generation of semantically annotated 3D city models from image collections. The idea of semantic 3D reconstruction is to recover the geometry of an observed scene while simultaneously interpreting it in terms of semantic object classes (such as buildings, vegetation, etc.). This mirrors a human operator, who also interprets the image content while making measurements. The advantage of jointly reasoning about shape and object class is that one can exploit class-specific a priori knowledge about the geometry. On the one hand, the type of object provides information about its shape: for example, walls are likely to be vertical, whereas streets are not. On the other hand, 3D geometry is also an important cue for classification: in the same example, vertical surfaces are more likely to be walls than streets. In our research, we address this cross-fertilization by developing methods that jointly infer 3D shape and semantic classes. The result is higher-quality, semantically interpreted 3D city models that support realistic applications and advanced reasoning tasks.
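The two-way dependence between shape and class can be illustrated with a toy example. The sketch below is hypothetical and is not the method used in this project. It assumes a made-up per-class prior on surface orientation (the names `ORIENTATION_PRIOR`, `geometry_score`, `classify` and `best_normal`, and all weights, are illustrative assumptions) and combines it with equally made-up appearance scores. `classify` shows geometry informing the class, and `best_normal` shows the class informing the geometry.

```python
import math

# Hypothetical orientation priors (illustrative values, not from the project):
# class -> (preferred |n_z|, weight), where n_z is the vertical (up) component
# of the unit surface normal. Walls have horizontal normals (|n_z| near 0),
# streets face upward (|n_z| near 1); weight 0 means orientation is uninformative.
ORIENTATION_PRIOR = {
    "wall": (0.0, 4.0),
    "street": (1.0, 4.0),
    "vegetation": (0.5, 0.0),
}


def unit_nz(normal):
    """Absolute vertical component of the normalized normal vector."""
    x, y, z = normal
    return abs(z) / math.sqrt(x * x + y * y + z * z)


def geometry_score(cls, normal):
    """Log-prior of a class given surface orientation (quadratic penalty)."""
    target, weight = ORIENTATION_PRIOR[cls]
    return -weight * (unit_nz(normal) - target) ** 2


def classify(appearance_scores, normal):
    """Geometry -> class: pick the class maximizing appearance + orientation score."""
    return max(appearance_scores,
               key=lambda cls: appearance_scores[cls] + geometry_score(cls, normal))


def best_normal(cls, candidate_normals):
    """Class -> geometry: pick the candidate normal that best fits the class prior."""
    return max(candidate_normals, key=lambda n: geometry_score(cls, n))


if __name__ == "__main__":
    # Appearance alone cannot separate wall from street here (equal scores).
    scores = {"wall": -1.0, "street": -1.0, "vegetation": -2.0}
    print(classify(scores, (1.0, 0.0, 0.0)))  # vertical surface -> wall
    print(classify(scores, (0.0, 0.0, 1.0)))  # horizontal surface -> street
    print(best_normal("wall", [(0.2, 0.0, 1.0), (1.0, 0.0, 0.1)]))  # -> (1.0, 0.0, 0.1)
```

Here appearance ties wall and street, and the orientation term breaks the tie. The toy applies the two directions one after the other, whereas the project's methods infer 3D shape and semantic classes jointly.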

New Publications:

Richard A., Vogel C., Bláha M., Pock T., Schindler K.: Semantic 3D Reconstruction with Finite Element Bases. British Machine Vision Conference (BMVC), London, Great Britain, 2017

Bláha M., Vogel C., Richard A., Wegner J.D., Pock T., Schindler K.: Large-Scale Semantic 3D Reconstruction: an Adaptive Multi-Resolution Model for Multi-Class Volumetric Labeling. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, USA, 2016 (Oral)

Bláha M., Vogel C., Richard A., Wegner J.D., Pock T., Schindler K.: Towards Integrated 3D Reconstruction and Semantic Interpretation of Urban Scenes. Dreiländertagung, D-A-CH Photogrammetry Meeting, Bern, Switzerland, 2016

Contact Details:

Maros Bláha