Large-Scale Deep Learning for Automatic Generation of Online Maps

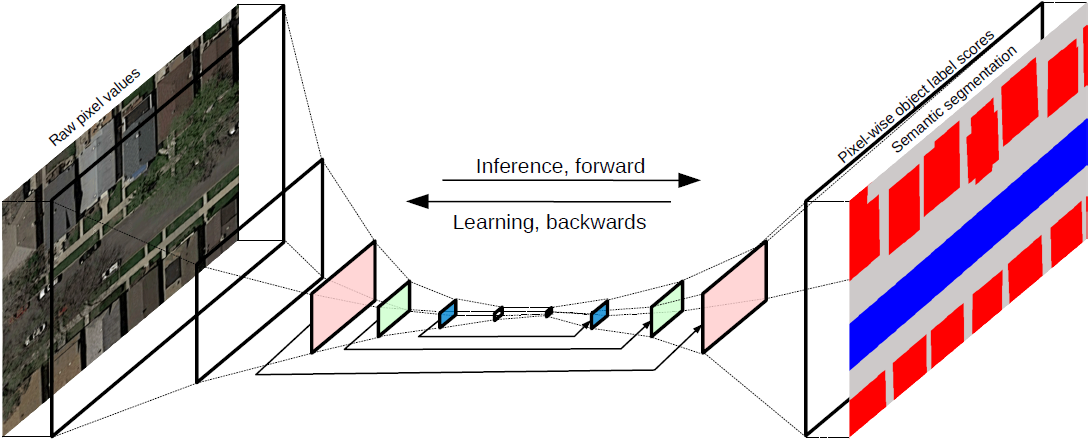

We are developing deep learning methods that use publicly available remote sensing and online map data to automatically generate new online maps. Although online maps (e.g., OpenStreetMap) do not match the aerial image content as accurately as ground truth labeled specifically for that purpose, they scale almost arbitrarily. We thus view existing maps as weak labels and tolerate small mismatches, in the hope that the sheer amount of training data will compensate for small errors. This also allows us to adapt quickly to new environments because no time or money has to be spent on generating ground truth manually. In a first step, fully convolutional neural networks are used for pixel-wise semantic segmentation of aerial images downloaded from Google Maps. In the long term, we aim to avoid this intermediate step on the way to map making. The idea is to directly infer vector representations of maps (i.e., points and lines that form polygons) and to design a deep learning architecture that is capable of training and inference on irregular graph structures (instead of regular grids like images). This would make it possible to learn long-range concepts like road networks and would also be valuable for other data that do not follow regular grid patterns (e.g., laser scanner point clouds).
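To make the weak-label idea concrete, the following is a minimal sketch of how map polygons could be turned into a pixel-wise label mask for training a segmentation network. It is an illustration under stated assumptions, not the project's actual pipeline: it assumes the polygons have already been projected into the pixel coordinates of the aerial image, and the names `point_in_polygon`, `rasterize_labels`, and the class id `BUILDING` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in image pixel coordinates (assumption)

BACKGROUND = 0  # hypothetical class ids, chosen for illustration only
BUILDING = 1


def point_in_polygon(x: float, y: float, polygon: Sequence[Point]) -> bool:
    """Even-odd ray casting: count edge crossings of a ray going in +x."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can cross the ray.
        if (y1 > y) != (y2 > y):
            # x coordinate at which the edge crosses that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize_labels(
    width: int,
    height: int,
    polygons: Sequence[Tuple[int, Sequence[Point]]],
) -> List[List[int]]:
    """Burn (class_id, polygon) pairs into a height x width label mask.

    Each pixel is tested at its center; later polygons overwrite earlier ones.
    """
    mask = [[BACKGROUND] * width for _ in range(height)]
    for class_id, polygon in polygons:
        for row in range(height):
            for col in range(width):
                if point_in_polygon(col + 0.5, row + 0.5, polygon):
                    mask[row][col] = class_id
    return mask


if __name__ == "__main__":
    # A single square "building" footprint on a 4 x 4 image tile.
    footprint = [(1.0, 1.0), (3.0, 1.0), (3.0, 3.0), (1.0, 3.0)]
    for mask_row in rasterize_labels(4, 4, [(BUILDING, footprint)]):
        print(mask_row)
```

Because the map geometry and the image are rarely perfectly aligned, a mask produced this way will be slightly wrong near object boundaries, which is exactly the kind of small mismatch that the weak-label view tolerates. A production pipeline would more likely rely on an established geospatial rasterizer than on this brute-force per-pixel test.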

Representative Publications:

Kaiser, P.: Learning City Structures from Online Maps, Master's Thesis, 2016.

Contact persons: Jan Dirk Wegner, Aurélien Lucchi (ETHZ, Data Analytics Lab) and Martin Jaggi (EPFL)